TL;DR a.k.a. Express Tutorial

- Install:

sudo apt update && sudo apt install ccache

or equivalent.

- First compilation:

ccache cc -o hello.o -c hello.c

- Recompilations:

rm hello.o && ccache cc -o hello.o -c hello.c

- Statistics:

ccache -s

to see how your cache grows after each recompilation.

Who will use this cache?

- Single user:

Default config is OK. You are ready to go. Stop reading here.

- Multiuser with shared cache:

Create the group `ccache` and include users in that group:

sudo groupadd ccache

for user in alice bob you me; do sudo adduser "$user" ccache; done

Create the cache dir `/var/ccache` with the proper permissions:

sudo mkdir -p /var/ccache

sudo chgrp ccache /var/ccache && sudo chmod g+s+w /var/ccache

Create the common config file `/var/ccache.env`:

cat > /var/ccache.env << __EOF__
CCACHE_DIR=/var/ccache
CCACHE_UMASK=002
CCACHE_NOHARDLINK=1
###CCACHE_NOHASHDIR=1
###CCACHE_BASEDIR=/home
###CCACHE_DEBUG=1
###CCACHE_DEBUGDIR=/var/ccache_debug
###CCACHE_LOGFILE=/var/ccache_log/ccache.log
###PATH=/path/to/executable/ccache:\$PATH
__EOF__

The config file may have to be tuned, depending on the compilation:

- All users compile in the same directory (for example `/tmp`), and use the same compiler command (for example `cc -o hello.o -c hello.c`):

Default settings are OK.

- Users compile in different directories (for example `/home/alice` and `/home/bob`, respectively), but all users use the same compiler command (for example `cc -o hello.o -c hello.c`):

Uncomment `CCACHE_NOHASHDIR=1`.

- Users compile in different directories, and some compiler command arguments include absolute paths to `/home` (for example `cc -o /home/alice/hello.o -c /home/alice/hello.c`):

Uncomment `CCACHE_BASEDIR=/home` (`CCACHE_NOHASHDIR` has no effect when `CCACHE_BASEDIR` is set).

- The compiler commands include some (weird) USER-based arguments, so arguments will always differ between users (for example `cc -o hello_$USER.o -c hello.c`):

Users may never share objects. Re-design your build system.

Consider uncommenting the PATH line, to be sure that all users use the same version of `ccache`.

Tutorial

This tutorial includes ccache setup instructions, and some test scripts, using ccache in the following situations:

- With `cc` and `make`

- With `cmake` and `ninja`

- Using a shared cache between different users on a host

- Using a shared cache between a "real user" on a host and a "Docker user" inside a container

While ccache itself works on several different platforms, this tutorial should be considered "Linux-biased", especially in how it handles users and Docker.

Requisites

- `ccache` (obviously)

- `asciidoctor` (optional, for building `ccache` docs)

- `make`

- `cmake`

- `ninja`

- `bc` (for calculating benchmarks)

If you plan to do Docker stuff, you will also need:

- `docker`

Chances are good that if you read this, one or more of these tools are already installed on your system.

If not, check your package manager for the simplest installation.

For Ubuntu:

sudo apt update && sudo apt install -y ccache make cmake ninja-build bc

sudo apt update && sudo apt install -y docker.io # For Docker fans

Links

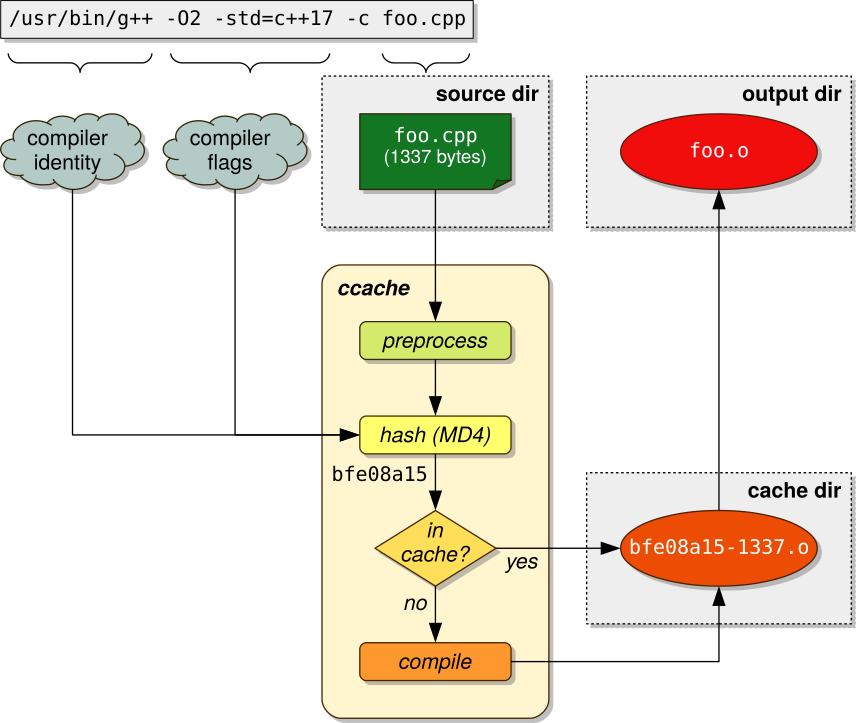

The tutorial describes very well how ccache works internally:

Picture 1: Schematic internal work flow of ccache.

Install ccache

Install from package

Ubuntu/Debian:

sudo apt install ccache # May already be installed

NOTE: Consider installing from source, as the package version of ccache may be quite outdated.

Install from source

Prerequisite for building the `ccache` docs (optional):

sudo apt install asciidoctor

Install `ccache` "locally" (that is, as non-root, somewhere under `$HOME`) in the `ccache-4.9.1/install` subdirectory of `<MYROOTDIR>`:

mkdir <MYROOTDIR> && cd <MYROOTDIR>

Either clone this repo and use its Makefile (includes tests):

fossil clone https://kuu.se/fossil/ccache.cgi

cd ccache

make cleaninstall install clean run

Or do a "manual install" (no tests available):

wget https://github.com/ccache/ccache/releases/download/v4.9.1/ccache-4.9.1.tar.gz

tar xvfz ccache-4.9.1.tar.gz

cd ccache-4.9.1

mkdir build

cd build

cmake -DCMAKE_INSTALL_PREFIX="$(readlink -f "$(pwd)/../install")" -DCMAKE_BUILD_TYPE=Release ..

make

make install

Omit the `CMAKE_INSTALL_PREFIX` option to install system-wide.

Enable ccache

See also: man(1) ccache, the CONFIGURATION section

Enable ccache using $PATH (for direct usage with cc/c++, stand-alone Makefiles etc.):

export PATH="/usr/lib/ccache:$PATH"

Set the ccache directory (optional)

Optionally, configure a dedicated cache directory for this project:

Set:

export CCACHE_DIR="$HOME/my_little_ccache"

Get:

echo $CCACHE_DIR

The cache directory may also be set by using the ccache command-line options.

(Note that the corresponding environment variable has a higher priority, and command-line options may have no effect.)

Set:

# This command only works with CCACHE_DIR *unset*

ccache --set-config=cache_dir=$HOME/my_little_ccache2

Get (with CCACHE_DIR set):

ccache --get-config=cache_dir

/home/kuuse/my_little_ccache # <--- Gets value from 'CCACHE_DIR'

Get (with CCACHE_DIR unset):

ccache --get-config=cache_dir

/home/kuuse/my_little_ccache2 # <--- Gets value from 'ccache --set-config=cache_dir'

Enable ccache using CMAKE_C_COMPILER_LAUNCHER/CMAKE_CXX_COMPILER_LAUNCHER (for CMake, obviously):

a. Pass the required parameters directly on the command line:

cmake -DCMAKE_C_COMPILER_LAUNCHER=ccache -DCMAKE_CXX_COMPILER_LAUNCHER=ccache ..

b. Define a boolean `USE_CCACHE` to use from the command line, and define the other parameters in `CMakeLists.txt` (recommended):

cmake -DUSE_CCACHE=1 ..

See `big-test/CMakeLists.txt` for details.
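A build script may also want to pass the launcher options only when `ccache` is actually installed, so the same script works with or without it. The following is a minimal sketch of that idea; the function name `launcher_flags` is hypothetical and not part of this repo.

```shell
#!/bin/sh
# Print the CMake launcher options if the given launcher program is found
# in PATH; print nothing otherwise, so the build also works without ccache.
# The function name 'launcher_flags' is hypothetical.
launcher_flags() {
    launcher="${1:-ccache}"
    if command -v "$launcher" >/dev/null 2>&1; then
        printf '%s %s\n' \
            "-DCMAKE_C_COMPILER_LAUNCHER=$launcher" \
            "-DCMAKE_CXX_COMPILER_LAUNCHER=$launcher"
    fi
}

# Show the command that would be run, without running it.
echo "cmake $(launcher_flags ccache) .."
```

If `ccache` is missing, the printed command is a plain `cmake ..` invocation.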

Tests

This repo includes a few scripts to run the following tests:

- Small tests, whose only purpose is to show that the `ccache` cache has been used (that is, that `ccache` has received "Hits", in `ccache` terms).

- Bigger tests, with the purpose of measuring both benchmarks and hits. These scripts create and compile 1000 files (using `make`), or 10000 files (using `cmake` + `ninja`), and compare compilation times without versus with `ccache`.

- Shared tests among users, less focused on benchmarks, more focused on the "cache integrity" when several users use the same cache, and on hits between users.

One of the shared tests runs a Docker container, to test sharing a cache between "host users" and "Docker container users".

1. Small tests

- 1a. Small test (using the locally installed `ccache`): step by step

Start in the ccache directory under the "ccache root" directory (<MYROOTDIR>/ccache in this example):

cd <MYROOTDIR>/ccache

Clean up and clear the cache, just to be sure that it is empty:

rm -rf ~/.ccache ~/.cache/ccache

./ccache-4.9.1/install/bin/ccache --clear

./ccache-4.9.1/install/bin/ccache --cleanup

Display the cache stats (initial, empty):

./ccache-4.9.1/install/bin/ccache --show-stats

Local storage:

Cache size (GiB): 0.0 / 5.0 ( 0.00%)

Compile an object file:

rm -f small-test/small-test.o

./ccache-4.9.1/install/bin/ccache gcc -c small-test/small-test.c -o small-test/small-test.o

Display the cache stats ("cold cache"):

./ccache-4.9.1/install/bin/ccache --show-stats

Cacheable calls: 1 / 1 (100.0%)

Hits: 0 / 1 ( 0.00%)

Direct: 0

Preprocessed: 0

Misses: 1 / 1 (100.0%)

Local storage:

Cache size (GiB): 0.0 / 5.0 ( 0.00%)

Hits: 0 / 1 ( 0.00%)

Misses: 1 / 1 (100.0%)

The cache stats say:

- There are "0 Hits", which means that `ccache` could not reuse any previously cached object.

This makes sense, as this is the first compilation, so the cache hasn't been used before, and is thus empty.

- There are "1 Misses", which means that `ccache` has stored one new object in the cache for future use.

Recompile the object file:

rm -f small-test/small-test.o

./ccache-4.9.1/install/bin/ccache gcc -c small-test/small-test.c -o small-test/small-test.o

Show how the cache has been affected:

./ccache-4.9.1/install/bin/ccache --show-stats

Cacheable calls: 2 / 2 (100.0%)

Hits: 1 / 2 (50.00%)

Direct: 1 / 1 (100.0%)

Preprocessed: 0 / 1 ( 0.00%)

Misses: 1 / 2 (50.00%)

Local storage:

Cache size (GiB): 0.0 / 5.0 ( 0.00%)

Hits: 1 / 2 (50.00%)

Misses: 1 / 2 (50.00%)

- There are now "1 Hits", which means that `ccache` has reused 1 previously cached object.

- There are still "1 Misses", which just reflects that no more objects have been stored in the cache since the previous compilation.

Recompile the object file 50 times and show the cache:

for n in $(seq 1 50); do rm -f small-test/small-test.o; ./ccache-4.9.1/install/bin/ccache gcc -c small-test/small-test.c -o small-test/small-test.o; done

./ccache-4.9.1/install/bin/ccache --show-stats

Cacheable calls: 52 / 52 (100.0%)

Hits: 51 / 52 (98.08%)

Direct: 51 / 51 (100.0%)

Preprocessed: 0 / 51 ( 0.00%)

Misses: 1 / 52 ( 1.92%)

Local storage:

Cache size (GiB): 0.0 / 5.0 ( 0.00%)

Hits: 51 / 52 (98.08%)

Misses: 1 / 52 ( 1.92%)

- The stats basically say: *The code has been compiled 52 times. The first time, the cached object did not exist (1 Miss), but the subsequent 51 times (Hits), the cached object already existed, and has been re-used.*

- Use `ccache` for *compiling*, not for *linking*

To confirm that `ccache` is intended for compiling but *not* for linking, link the small program into an executable (also explained [here](https://stackoverflow.com/questions/29828430/what-does-ccache-mean-by-called-for-link)):

rm -f small-test/small-test

./ccache-4.9.1/install/bin/ccache gcc -o small-test/small-test small-test/small-test.o

Show the cache:

./ccache-4.9.1/install/bin/ccache --show-stats

Cacheable calls: 52 / 53 (98.11%)

Hits: 51 / 52 (98.08%)

Direct: 51 / 51 (100.0%)

Preprocessed: 0 / 51 ( 0.00%)

Misses: 1 / 52 ( 1.92%)

Uncacheable calls: 1 / 53 ( 1.89%) # <--- linking NOT supported by 'ccache'

Local storage:

Cache size (GiB): 0.0 / 5.0 ( 0.00%)

Hits: 51 / 52 (98.08%)

Misses: 1 / 52 ( 1.92%)

As above, link the executable 50 times and show the cache:

for n in $(seq 1 50); do rm -f small-test/small-test; ./ccache-4.9.1/install/bin/ccache gcc -o small-test/small-test small-test/small-test.o; done

./ccache-4.9.1/install/bin/ccache --show-stats

Cacheable calls: 52 / 103 (50.49%)

Hits: 51 / 52 (98.08%)

Direct: 51 / 51 (100.0%)

Preprocessed: 0 / 51 ( 0.00%)

Misses: 1 / 52 ( 1.92%)

Uncacheable calls: 51 / 103 (49.51%) # <--- linking NOT supported by 'ccache'

Local storage:

Cache size (GiB): 0.0 / 5.0 ( 0.00%)

Hits: 51 / 52 (98.08%)

Misses: 1 / 52 ( 1.92%)
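When these checks are scripted, the counters can be pulled out of the `--show-stats` text. The following is a minimal sketch, assuming the output layout shown above; the function name `stats_summary` is hypothetical, and the sample input is copied from the last output.

```shell
#!/bin/sh
# Print "hits misses uncacheable" from 'ccache --show-stats' text on stdin.
# Only the first "Hits:" and "Misses:" lines are used (the summary block,
# not the "Local storage" block). 'stats_summary' is a hypothetical name.
stats_summary() {
    awk '
        $1 == "Hits:"   && hits   == "" { hits   = $2 }
        $1 == "Misses:" && misses == "" { misses = $2 }
        $1 == "Uncacheable" && $2 == "calls:" { unc = $3 }
        END { printf "%d %d %d\n", hits, misses, unc }
    '
}

# Sample input copied from the output shown above.
sample='Cacheable calls: 52 / 103 (50.49%)
Hits: 51 / 52 (98.08%)
Direct: 51 / 51 (100.0%)
Preprocessed: 0 / 51 ( 0.00%)
Misses: 1 / 52 ( 1.92%)
Uncacheable calls: 51 / 103 (49.51%)
Local storage:
Cache size (GiB): 0.0 / 5.0 ( 0.00%)
Hits: 51 / 52 (98.08%)
Misses: 1 / 52 ( 1.92%)'

printf '%s\n' "$sample" | stats_summary
```

With a real cache, the input would come from `ccache --show-stats | stats_summary`. The 51 uncacheable calls are the link commands, which `ccache` passes straight to the compiler.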

- 1b. Small test with local install: using `make` and `cmake` + `ninja`, respectively

The scripts in the small-test directory show some "cache hits", similar to what is described above.

- Using a Makefile:

cd small-test && make clean all run && cd -

- Using `cmake` + `ninja`:

cd small-test ; ./build.sh ; cd -

2. Bigger tests (for benchmarks)

The scripts in the big-test directory perform some benchmarks when using versus not using ccache.

The number of "Cache Hits" is also included in the script output.

ccache is assumed to be included in PATH.

- 2a. Bigger test, using `make`

The bigtest-make.sh test script performs the following steps, first without using ccache:

1. Create and compile 1000 `.c` files in the `autosrc` subdirectory.

2. Modify 3 `.c` files and recompile, without cleaning `.o` files. This step should be much faster than Step 1, as only 3 out of 1000 files require recompilation.

3. Clean the `.o` files and recompile. This step should take about the same time as Step 1.

The 3 steps above are then repeated with ccache enabled:

1. Compile and create the ("cold") cache. This step should be slower than Step 1 without `ccache`, as the cache has to be populated.

2. Similar to Step 2 without `ccache`, as most files do not require recompilation.

3. Using the "warm" cache, this is where the speed difference should show, compared to Step 3 without `ccache`.

To run:

cd big-test ; ./bigtest-make.sh ; cd -

A sample output from the script shows the difference in step 3:

----------------------------------------

WITHOUT 'ccache':

----------------------------------------

1. Compile from scratch : 30.118715216 seconds, hits: 0

2. Recompile, no clean : 4.324113334 seconds, hits: 0

3. Recompile, clean : 29.754029804 seconds, hits: 0

----------------------------------------

WITH 'ccache':

----------------------------------------

1. Compile from scratch : 40.300708266 seconds, hits: 0 <--- FIRST TIME COMPILATION IS SLOWER, AS EACH OBJECT FILE HAS TO BE STORED IN THE CACHE

2. Recompile, no clean : 4.880028223 seconds, hits: 0

3. Recompile, clean : 7.163695150 seconds, hits: 998 <--- ANY SUBSEQUENT COMPILATION IS FASTER, AS THE CACHED OBJECT FILE IS USED INSTEAD OF RECOMPILING

Conclusion:

With this (non-optimized) build script using make, using ccache makes the following difference:

- First time compilation is about 40.30/30.12 = `echo "scale = 2 ; 40.30 / 30.12" | bc -l` = 1.33 times slower.

- Subsequent compilations are about 29.75/7.16 = `echo "scale = 2 ; 29.75 / 7.16" | bc -l` = 4.15 times faster.

- 2b. Bigger test, using `CMake` + `Ninja`

The bigtest-cmake-ninja.sh test script is functionally similar to bigtest-make.sh, with the following differences:

- Helper tool: This script calls `build.sh [--enable-ccache]` as a helper tool, which uses CMake + Ninja to compile one step, optionally with `ccache` enabled. The following lines are the most important:

cmake -GNinja -DUSE_CCACHE=1 ..

ninja

- Number of source files: The previous script, `bigtest-make.sh`, invokes the `make` executable 1000 times in a loop (not very optimized), so the script is quite slow. (`make` is not the only one to blame for that.)

Compared to that, `bigtest-cmake-ninja.sh` is quite optimized, invoking `ninja` only once. Besides, `ninja` is generally known to run builds faster than `make`.

To make the benchmarks easier to distinguish when using `ccache` with `ninja`, the number of compiled files has been increased from 1 000 to 10 000.

To run:

cd big-test ; ./bigtest-cmake-ninja.sh ; cd -

A sample output from the script shows a slightly higher gain than when using make:

----------------------------------------

WITHOUT 'ccache':

----------------------------------------

1. Compile from scratch : 69.394286578 seconds, hits: 0

2. Recompile, no clean : 3.824383957 seconds, hits: 0

3. Recompile, clean : 71.783934170 seconds, hits: 0

----------------------------------------

WITH 'ccache':

----------------------------------------

1. Compile from scratch : 124.594061550 seconds, hits: 0 <--- WITH NO HITS, COMPILATION TAKES LONGER TIME, AS EACH OBJECT FILE HAS TO BE STORED IN THE CACHE

2. Recompile, no clean : 3.861029822 seconds, hits: 0

3. Recompile, clean : 11.009750885 seconds, hits: 10002 <--- HERE IS THE BIG GAIN

Conclusion:

With this (quite optimized) build script using cmake + ninja, using ccache makes the following difference:

- First time compilation is about 124.59/69.39 = `echo "scale = 2 ; 124.59 / 69.39" | bc -l` = 1.79 times slower.

- Subsequent compilations are about 71.78/11.01 = `echo "scale = 2 ; 71.78 / 11.01" | bc -l` = 6.51 times faster.
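The ratios in both conclusions can be recomputed from the unrounded timings. The following is a minimal sketch using `awk` instead of `bc`; the helper name `ratio` is hypothetical. Note that `awk` rounds to two decimals, whereas `bc` with `scale = 2` truncates, so the last digit may differ by one from the figures quoted above.

```shell
#!/bin/sh
# Print a/b with two decimals. awk rounds, whereas 'bc' with scale=2
# truncates, so the last digit may differ by one from the figures above.
ratio() {
    LC_ALL=C awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# Timings copied from the cmake + ninja sample output.
echo "Cold cache slowdown: $(ratio 124.594061550 69.394286578)x"
echo "Warm cache speedup:  $(ratio 71.783934170 11.009750885)x"
```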

3. Shared tests (for hits among users)

The purpose of a shared cache between users is that one user may reuse cached objects created by another user.

Picture 2: Shared ccache cache among users.

In the shared-test subdirectory, there are some test scripts for running a shared cache, but before going into the script details, let's review the setup and check for potential issues.

Setup summary for a shared cache between users

- Create a common group on the host, for example `ccache` (this may be `devel` or whatever).

- Include all users/developers who will use the cache in the group `ccache` (or whatever group name was chosen).

- Create a cache directory, for example `/var/ccache`, owned by the newly created group.

- Create a common config file, `/var/ccache.env`, with a list of environment variables, to be sourced by each user at login or before compiling.

The common config file is optional, but highly recommended, to guarantee that `ccache` is always used with the same environment variables for all users.

- Optionally, tweak `/var/ccache.env` for "compile-dependent" issues, as described below.

- Optional, for `make` and `cc` users: Create an additional file, `/var/ccache-for-make-and-cc.env`, with the additional line `export PATH="/usr/lib/ccache:$PATH"`.

(This file is not needed for `cmake` users, as `cmake` "knows" how to set up `ccache` without environment variables.)

- Add some simple checks to any existing build scripts, to avoid unpleasant side effects.

This step is optional, but recommended.

Potential "compile-dependent" issues with a shared cache

Depending on how users compile the code, /var/ccache.env may have to be tweaked to make things work as expected (that is, getting hits between users).

Besides the file content itself (the source code), the following factors affect the hash calculated for a cache object:

- The compiler command arguments:

Are the compiler arguments the same for all users, or do they include absolute paths referring to, for example, `/home/$USER`?

- The CWD (Current Working Directory):

Do users use different CWDs when they compile? (Normally they do.)

- *Compiler details, as described here*:

All users must use the same compiler (same version, same installation timestamp, etc).
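To see why these factors matter, here is a toy model of a cache key. It is explicitly not how `ccache` computes its hash: it just checksums the source text, the command line and (optionally) the CWD with `cksum`, to show that any input that differs between users produces a different key. The function name `toy_key` is hypothetical.

```shell
#!/bin/sh
# Toy model only: this is NOT how ccache computes its hash. It merely
# shows that any input that differs between users changes the cache key.
# Usage: toy_key SOURCE_TEXT COMMAND [CWD]
toy_key() {
    printf '%s\n%s\n%s\n' "$1" "$2" "${3:-}" | cksum | cut -d' ' -f1
}

src='int main(void) { return 0; }'
cmd='cc -o hello.o -c hello.c'

# CWD included: alice and bob get different keys.
echo "alice, with CWD:    $(toy_key "$src" "$cmd" /home/alice)"
echo "bob,   with CWD:    $(toy_key "$src" "$cmd" /home/bob)"
# CWD left out: both users get the same key.
echo "alice, without CWD: $(toy_key "$src" "$cmd")"
echo "bob,   without CWD: $(toy_key "$src" "$cmd")"
```

Different keys mean that Bob can never hit an object stored by Alice, even though the source and the command are identical.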

Here are 4 different situations for shared compilations, 3 of them with a solution:

1. All users compile in the same directory (for example `/tmp`), and use the same compiler command.

Sample output:

cd /tmp

pwd # Always /tmp

cc -o hello.o -c hello.c

This is probably not a very common situation.

Solution: Default settings are OK.

2. Users compile in different directories (for example `CWD=/home/alice` and `CWD=/home/bob`, respectively), but all users use the same compiler command.

Sample output:

pwd # May vary

cc -o hello.o -c hello.c

The CWD is normally included in the hash calculation for a cache object, but this may be disabled by setting `hash_dir = false`.

This is probably the most common situation for a shared cache. For example, compiler commands generated by `cmake` are (if possible) always user-independent.

Solution: Uncomment `CCACHE_NOHASHDIR=1`

3. Users compile in different directories, and some compiler command arguments include absolute paths to `/home`.

Sample output:

Compiling as 'alice', CWD=/home/alice/project1/build:

ccache cc -o /home/alice/project1/build/hello.o -c /home/alice/project1/hello.c

Compiling as 'bob', CWD=/home/bob/stuff/project1/build:

ccache cc -o /home/bob/stuff/project1/build/hello.o -c /home/bob/stuff/project1/hello.c <--- NOT SAME COMMAND AS FOR ALICE !

When `base_dir` is set, compiler command arguments containing absolute paths that match the config value (for example, `/home`) are rewritten to relative paths, based on the CWD.

(The CWD itself is not included in the hash when `base_dir` is set.)

So even if users use different directory levels, the resulting compiler command is the same.

The resulting compiler commands:

Compiling as 'alice', CWD=/home/alice/project1/build:

ccache cc -o ./hello.o -c ../hello.c

Compiling as 'bob', CWD=/home/bob/stuff/project1/build:

ccache cc -o ./hello.o -c ../hello.c <--- SAME COMMAND AS FOR ALICE !

This is probably a less common situation, as most modern build tools avoid creating build arguments with absolute paths.

Anyway, if that is the case, this is the solution.

Solution: Uncomment `CCACHE_BASEDIR=/home`

4. The compiler commands include some (weird) USER-based arguments, so compiler command arguments will always differ between users (for example `cc -o hello_$USER.o -c hello.c`):

Sample output:

Compiling as 'alice':

ccache cc -o /home/alice/hello_alice.o -c /home/alice/hello.c

Compiling as 'bob':

ccache cc -o /home/bob/hello_bob.o -c /home/bob/hello.c

This is probably a very uncommon situation.

No solution: Users may never share objects. Re-design your build system, or let each user set up their own private cache.
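The path rewriting described for situation 3 can be illustrated with GNU `realpath`. This is only a sketch of the effect, not ccache's own code; it assumes GNU coreutils (`-m` lets the paths be non-existent), and the helper name `rel` is hypothetical.

```shell
#!/bin/sh
# Illustration only: rewrite an absolute path relative to a build directory,
# using GNU 'realpath' (-m: the paths do not need to exist).
# This mimics the effect described above; it is not ccache's own code.
# Usage: rel BUILD_DIR ABSOLUTE_PATH
rel() {
    realpath -m --relative-to="$1" "$2"
}

alice_src="$(rel /home/alice/project1/build /home/alice/project1/hello.c)"
bob_src="$(rel /home/bob/stuff/project1/build /home/bob/stuff/project1/hello.c)"

echo "alice compiles: $alice_src"
echo "bob compiles:   $bob_src"
```

Both users end up with the same relative source path, which is what allows their compilations to share a cache entry.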

NOTE:

A mis-configured shared cache that cannot share objects among users will probably still work as an individual cache for each user.

This may give the developer the sensation that the shared cache "is working", as there still are hits (but not between users).

The developer should always check (and possibly debug) the cache to be sure it works as intended.

Recommendations from the ccache development team

For the environment variables and the script checks, let's follow the instructions for sharing a cache in the ccache(1) man page:

A group of developers can increase the cache hit rate by sharing a cache directory. To share a cache without unpleasant side effects, the following conditions should to be met:

- Use the same cache directory.

echo 'export CCACHE_DIR=/var/ccache' >> /var/ccache.env

- Make sure that the configuration setting `hard_link` is false (which is the default).

echo 'export CCACHE_NOHARDLINK=1' >> /var/ccache.env

- Make sure that all users are in the same group.

sudo groupadd ccache && sudo adduser $(whoami) ccache

See also the script checks below.

- Set the configuration setting umask to 002. This ensures that cached files are accessible to everyone in the group.

echo 'export CCACHE_UMASK=002' >> /var/ccache.env

See also the script checks below.

- Make sure that all users have write permission in the entire cache directory (and that you trust all users of the shared cache).

sudo chgrp ccache /var/ccache && sudo chmod g+s+w /var/ccache

- Make sure that the setgid bit is set on all directories in the cache. This tells the filesystem to inherit group ownership for new directories. The following command might be useful for this:

find $CCACHE_DIR -type d | xargs chmod g+s

See also the script checks below.

- You may also want to make sure that a base directory is set appropriately, as discussed in a previous section.

Check out "compile-dependent" issues to see if one of the following settings needs to be added:

either (more likely)...

echo 'export CCACHE_NOHASHDIR=1' >> /var/ccache.env

...or (less likely):

echo 'export CCACHE_BASEDIR=/home' >> /var/ccache.env

The script checkers

Recommended to be run before any compilation.

Check for existing cache directory and config:

CCACHE_CACHE_DIR=/var/ccache
CCACHE_ENV_FILE=/var/ccache.env
if [ -d "${CCACHE_CACHE_DIR}" ] && [ -f "${CCACHE_ENV_FILE}" ]; then
    # OK, set 'ccache' environment variables
    source "${CCACHE_ENV_FILE}"
else
    # NOK, show error message
    printf "WARNING: The 'ccache' cache dir and/or the 'ccache' config file does not exist. A minimal setup:\n"
    printf "sudo mkdir -p %s\n" "$CCACHE_CACHE_DIR"
    printf "sudo touch %s\n" "$CCACHE_ENV_FILE"
    printf "find %s -type d | xargs chmod g+s\n" "$CCACHE_CACHE_DIR"
    CCACHE_WARNING_MISSING_CACHE_DIR_OR_ENV_FILE=1
fi

Check for existing `ccache` group:

if [ -z "$(getent group ccache)" ]; then
    printf "WARNING: The group 'ccache' does not exist, so the compilation will not be cached properly, or will simply fail! To create the group:\n"
    printf "sudo groupadd ccache\n"
    CCACHE_WARNING_MISSING_GROUP=1
fi

Check for current user being in the group `ccache`:

# Warn only when 'ccache' is NOT among the current user's groups
if ! groups | tr ' ' '\n' | grep -q '^ccache$'; then
    printf "WARNING: User '%s' is not in group 'ccache', so the compilation will not be cached! To add this user to group 'ccache', type:\n" "$(whoami)"
    printf "sudo adduser %s ccache\n" "$(whoami)"
    printf "TIP: Logout/login (or similar) to pickup the 'adduser' changes!\n"
    CCACHE_WARNING_USER_NO_IN_CCACHE_GROUP=1
fi

Check for incorrect `CCACHE_UMASK`:

if [ "$CCACHE_UMASK" != "002" ] && [ "$CCACHE_UMASK" != "0002" ]; then
    printf "WARNING: CCACHE_UMASK is set to '%s' instead of to '002', so the compilation cache may misbehave! To change it:\n" "$CCACHE_UMASK"
    printf "export CCACHE_UMASK=002\n"
    CCACHE_WARNING_WRONG_UMASK=1
fi
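The group membership test is easy to get backwards, so it may help to isolate it in a small helper that can be tried with fixed input. The following is a minimal sketch; the function name `group_in_list` is hypothetical, and `id -nG` is used to list the current user's group names.

```shell
#!/bin/sh
# Return 0 if group name $1 is in the space-separated list $2.
# -x matches the whole line and -F disables regex interpretation,
# so 'ccache2' does not match 'ccache'.
group_in_list() {
    printf '%s\n' "$2" | tr ' ' '\n' | grep -qxF -- "$1"
}

# 'id -nG' prints the group names of the current user.
if group_in_list ccache "$(id -nG)"; then
    echo "OK: $(id -un) is in group 'ccache'"
else
    echo "WARNING: $(id -un) is not in group 'ccache'"
fi
```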

Shared cache: The final setup

- Create the group:

sudo groupadd ccache

- Add the current user to the group:

sudo adduser "$(whoami)" ccache

TIP: This one bit me: Either run `exec su -l $USER`, or `sudo login`, or log out from the shell and log in again, so that the current user's membership in the `ccache` group takes effect.

- Create the shared `ccache` cache directory and the config file:

As root:

sudo mkdir -p /var/ccache

sudo chgrp ccache /var/ccache

sudo chmod g+s+w /var/ccache

As root user, create the config file:

cat > /var/ccache.env << __EOF__

export CCACHE_DIR=/var/ccache

export CCACHE_UMASK=002

export CCACHE_NOHARDLINK=1

export CCACHE_NOHASHDIR=1

###export CCACHE_BASEDIR=/home

###export CCACHE_DEBUG=1

###export CCACHE_DEBUGDIR=/var/ccache_debug

###export CCACHE_LOGFILE=/var/ccache_log/ccache.log

__EOF__

Optionally, create an alternative config file, if only cc, c++, or stand-alone Makefiles will be used:

cat > /var/ccache-for-make-and-cc.env << __EOF__

# Common 'ccache' environment variables

source /var/ccache.env

# Environment variable for use with cc/c++/Make

export PATH="/usr/lib/ccache:\$PATH"

__EOF__

If you don't plan to use Docker containers, this is it.

Happy shared compiling!

TIP: For a quick start, check out the scripts in the shared-test directory.

The scripts (shared-test/ subdirectory)

The shared-test scripts basically perform tests for "hits/misses" using a shared cache.

Prerequisites:

- These scripts require root access.

- It is convenient to create a couple of test users, and include them in the `ccache` group:

sudo groupadd ccache

sudo useradd --create-home --groups ccache --password "$(openssl passwd -1 alice)" alice

sudo useradd --create-home --groups ccache --password "$(openssl passwd -1 bob)" bob

NOTE:

Creating two users is normally enough for testing. The same two users may be repeated (alternated) as many times as wanted from the command-line:

sudo ./<SHAREDTEST-SCRIPT>.sh USER1 USER2 USER1 USER2 ...

For example:

sudo ./sharedtest-bigtest.sh alice bob alice bob

- 3a: Similar to the `big-test` script, compiling as different users

Check that the number of cache hits grows as expected when using a shared cache:

sudo ./sharedtest-bigtest.sh USER1 USER2 ...

- 3b: Compile as different users, but in the same directory `/tmp`

Check that the default `ccache` settings work as expected when different users compile in the same directory:

sudo ./sharedtest-hits-with-default-settings.sh USER1 USER2 ...

- 3c: Compile in each user's HOME directory

Check that setting `CCACHE_NOHASHDIR=1` works as expected when different users compile in different directories, but without using absolute paths in the compiler arguments:

sudo ./sharedtest-hits-with-nohashdir.sh USER1 USER2 ...

- 3d: Compile in each user's HOME directory using absolute paths

Check that setting `CCACHE_BASEDIR=/home` works as expected when different users compile in different directories, using absolute paths in the compiler arguments:

sudo ./sharedtest-hits-with-basedir.sh USER1 USER2 ...

- 3e: Simulate a badly-designed build system, having some odd USER-dependent paths

This script shows an example of a (badly-designed) build system which never will be able to share cache objects among users:

sudo ./sharedtest-misses-no-solution.sh USER1 USER2 ...

Results (shared tests)

Example output from any script except sharedtest-misses-no-solution.sh, re-compiling 48 object files without any changes:

The cache is working properly, as Bob may reuse cached objects created by Alice.

#================================================================================

# User: alice, Hits: 0 (0)

# User: alice, Hits: 48 (48)

# User: alice, Hits: 96 (96)

# User: bob, Hits: 144 (144)

#================================================================================

Example output from sharedtest-misses-no-solution.sh, re-compiling 48 object files without any changes:

The cache is working properly for Alice as an individual cache, but Bob has to create his own cached object, as he may NOT reuse the cached objects created by Alice.

#================================================================================

# User: alice, Hits: 0 (0)

# User: alice, Hits: 48 (48)

# User: alice, Hits: 96 (96)

# User: bob, Hits: 96 (144)

#================================================================================
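Output like this can be checked automatically. The following is a minimal sketch, assuming that each line has the form `# User: NAME, Hits: ACTUAL (EXPECTED)`, which is an interpretation of the sample output above rather than a documented format; the function name `users_with_missing_hits` is hypothetical.

```shell
#!/bin/sh
# Print the users whose actual hit count differs from the count in
# parentheses. Assumption: the lines have the form
#   "# User: NAME, Hits: ACTUAL (EXPECTED)"
# as in the sample output above.
users_with_missing_hits() {
    sed -n -E 's/^# User: ([^,]+), Hits: ([0-9]+) \(([0-9]+)\)$/\1 \2 \3/p' |
    while read -r user actual expected; do
        if [ "$actual" -ne "$expected" ]; then
            echo "$user"
        fi
    done
}

# Sample input copied from the sharedtest-misses-no-solution.sh output.
users_with_missing_hits <<'EOF'
# User: alice, Hits: 0 (0)
# User: alice, Hits: 48 (48)
# User: alice, Hits: 96 (96)
# User: bob, Hits: 96 (144)
EOF
```

For the sample input this prints `bob`, the only user who did not get hits from the other user's cached objects.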

Docker-related test

The scripts in the docker-test directory (similar to the shared-test scripts) are used to address Docker-related issues that may occur when using a shared cache.

Picture 3: Shared ccache cache, including a "Docker user".

Important issues when using Docker with a shared cache:

1. Inside a Docker container, do NOT compile as root!

Compiling as root on any host is not recommended, and hardly ever necessary, and the same applies to a Docker container.

Unfortunately, unless configured otherwise, the default user inside a Docker container is root.

The solution is to always pass "our own" User ID as a Docker build argument, so the container runs as that ID (instead of as root).

The created user inside the container can have any name, but the important detail is that the UID/GID is the same as for the user on the host.

This will result in compilations inside or outside the container being transparent to other users sharing the cache.

They will only see the "host User ID" in the cache.

On the other hand, compiling as root will most probably mess up the shared cache.

2. Be sure that the same compiler version is used on the host and inside the Docker container.

As already mentioned, the common hash information for ccache includes compiler version.

That means that all users must use the same compiler when sharing a cache.

This is not much of an issue when sharing a cache on a host, as the users probably use the same (one and only installed) compiler anyway.

Anyhow, if a copy of the compiler is installed in each existing Docker container, it is important that all copies of the compiler are exactly the same version for the shared cache to work.

Mounting the compiler executable and libraries from the host is probably not a good idea.

Even if this guarantees that all Docker containers use exactly the same compiler version, it makes the container "dependent" on the host, instead of being "stand-alone" (see below).

3. If used in a CI environment, the Docker container should probably be able to work as a stand-alone unit, also without ccache.

Docker containers are frequently used in Continuous Integration ("Nightly Builds" etc).

These containers are built in an environment "from scratch", where no shared cache is (nor should be) available.

A properly designed build system should be able to work smoothly either with or without ccache available.

See small-test/CMakeLists.txt for an example of how CMake uses ccache conditionally, but also works if ccache is not available.

As mentioned above, no mount volumes are normally available in a CI environment, so the Docker image should include its own copy of the compiler.

4. It is probably a good idea to mount the ccache executable from the host, to make it "optionally available".

As discussed above, the compiler should normally not be mounted from the host into the Docker container.

Anyhow, in the case of ccache, it is probably better to mount the executable from the host instead of installing a copy into the Docker image, for the following reasons:

- Installing a copy of `ccache` into a Docker image from a package (i.e. `apt install ccache`) probably provides an outdated version of `ccache`.

- Compiling and installing a copy of `ccache` into a Docker image from source increases the Docker build time unnecessarily.

- The compiler is used in all environments, so it makes sense to include the compiler in the Docker image.

However, `ccache` is not used in a CI environment, so it is better to make it "optionally available".

That is, when compiling locally, `docker run ...` can be run with the option to mount the `ccache` executable from the host, to use a shared cache.

In the CI environment, `docker run ...` can be run without mounting the `ccache` executable, ignoring any `ccache`-related stuff.

NOTE: When mounting a `ccache` executable compiled from source on the host, remember to link `hiredis` statically, as described in the ccache install document.

Otherwise, executing `ccache` from within the Docker container will fail:

ccache: error while loading shared libraries: libhiredis.so.0.14: cannot open shared object file: No such file or directory

Docker-and-permissions related links

- https://web.archive.org/web/20200131005945/http://frungy.org/docker/using-ccache-with-docker/

- https://vsupalov.com/docker-shared-permissions/

- https://stackoverflow.com/questions/27701930/how-to-add-users-to-docker-container

- https://betterprogramming.pub/running-a-container-with-a-non-root-user-e35830d1f42a

- https://code.visualstudio.com/remote/advancedcontainers/add-nonroot-user

Setup resume for a shared cache when using Docker

- Use the very same config file /var/ccache.env as for shared users.

  The Docker option --env-file /var/ccache.env may be used for this.

  NOTE: The Docker --env-file option does NOT accept the export KEY=VALUE syntax, that is, with the export keyword present.

Invalid for Docker:

export CCACHE_DIR=/var/ccache # <--- OK FOR SCRIPTS/BASH/ETC, NOK FOR DOCKER!!!

Valid syntax both for Bash shell and Docker env:

cat > /var/ccache.env << __EOF__

CCACHE_DIR=/var/ccache

CCACHE_UMASK=002

CCACHE_NOHARDLINK=1

###CCACHE_NOHASHDIR=1

###CCACHE_BASEDIR=/home

###CCACHE_DEBUG=1

###CCACHE_DEBUGDIR=/var/ccache_debug

###CCACHE_LOGFILE=/var/ccache_log/ccache.log

###PATH=/path/to/executable/ccache:$PATH

__EOF__
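Before passing the file to Docker, it may be worth checking that no export keyword has slipped in. The following is a small sketch; the function name check_env_file is hypothetical, and the demo uses a temporary file instead of the real /var/ccache.env.

```shell
#!/bin/sh
# Hypothetical check: list lines that start with the `export` keyword,
# which Docker's --env-file does not accept as KEY=VALUE.
check_env_file() {
    if grep -nE '^[[:space:]]*export[[:space:]]' "$1"; then
        echo "ERROR: remove the 'export' keyword from the lines above" >&2
        return 1
    fi
    return 0
}

# Demo on a temporary file with one offending line.
tmp="$(mktemp)"
printf 'CCACHE_DIR=/var/ccache\nexport CCACHE_UMASK=002\n' > "$tmp"
check_env_file "$tmp" || echo "file needs fixing"
rm -f "$tmp"
```

In a Bash script, the same export-free file can still be loaded with set -a; . /var/ccache.env; set +a, which exports every variable assigned while the file is sourced.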

- Prepare four almost identical Dockerfiles (see below).

  One Dockerfile is designed to run the container as root, but changes user for "the compilation part" (the least recommended way).

  The other three Dockerfiles are designed to run the container as a non-root user.

  The "real" user's UID and the GID for ccache should always be used as arguments, so that the Docker user accesses the cache with the same UID/GID as the "real" user.

  The only difference between these Dockerfiles is the origin of ccache:

  - installed from apt

  - installed from source (inside the Docker image)

  - the ccache executable mounted as a volume from the host

- Mount the host's /var/ccache as a Docker volume when starting the Docker container so the cache is accessible from inside the container.

- Optionally, mount /path/to/executable/ccache to guarantee that the same ccache version is used both inside and outside the Docker container.

  This is done in one of the four Dockerfiles below.

- Check that the user running the docker commands is included both in the ccache and the docker groups.

- Check that all Docker images use the same compiler version.
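The group check from the list above can be sketched as a small preflight script. The function name user_in_group is hypothetical; the group names ccache and docker are the ones used in this document.

```shell
#!/bin/sh
# Hypothetical preflight check: is user $1 a member of group $2?
user_in_group() {
    # `id -nG` prints the user's group names separated by spaces.
    id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qx -- "$2"
}

me="$(id -un)"
for g in ccache docker; do
    if user_in_group "$me" "$g"; then
        echo "OK: $me is in group '$g'"
    else
        echo "WARNING: $me is not in group '$g'"
    fi
done
```

Note that a newly added group membership usually only takes effect after logging in again.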

The Dockerfiles

The files can be generated with this script:

cd docker-test && ./create-dockerfiles.sh

Alternatively, run cd docker-test && make clean all run to:

- Create the Dockerfiles

- Build the Docker images

- Run the Docker containers

Dockerfile 1 (the least recommended): The root Dockerfile

See description above.

Dockerfile 2 (the third best): The non-root Dockerfile

NOTE:

The last line in this Dockerfile...

USER ccache

... guarantees that the Docker container is run as a non-root user. This is the recommended way. And the safest. And the easiest.
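For orientation, here is a rough sketch of what such a non-root Dockerfile could look like, written out by a shell function. It is not the output of create-dockerfiles.sh. The base image, the package list and the build argument names USER_UID and CCACHE_GID are assumptions.

```shell
#!/bin/sh
# Hypothetical generator for a non-root Dockerfile (assumed content).
write_nonroot_dockerfile() {
    cat > "$1" << 'EOF'
FROM ubuntu:22.04
# Assumed argument names: the "real" user's UID and the GID of group ccache.
ARG USER_UID=1000
ARG CCACHE_GID=1000
RUN apt-get update \
    && apt-get install -y --no-install-recommends gcc libc6-dev ccache \
    && rm -rf /var/lib/apt/lists/*
RUN groupadd -g "$CCACHE_GID" ccache \
    && useradd -m -u "$USER_UID" -g ccache ccache
USER ccache
EOF
}

out_dir="$(mktemp -d)"
write_nonroot_dockerfile "$out_dir/Dockerfile"
echo "Wrote $out_dir/Dockerfile"
# Build hint (not executed here):
# docker build --build-arg USER_UID="$(id -u)" \
#     --build-arg CCACHE_GID="$(getent group ccache | cut -d: -f3)" "$out_dir"
```

Passing the host's UID and the ccache GID at build time is what lets the container user write to /var/ccache with the same identity as the "real" user.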

Dockerfile 3 (normally the second best): The non-root Dockerfile, with ccache built from source (otherwise identical to option 2)

NOTE:

Building the ccache executable inside the Docker image makes ccache work even if not installed on the host.

Anyhow, under normal circumstances, it is desirable to use ccache both on the host and inside the Docker container, to take the biggest advantage of the shared cache.

Building a separate ccache copy inside the container may also cause version conflicts between the host and the Docker container, so installing ccache on the host and mounting the executable (see Option 4 below) is probably the preferable way for most situations.

Dockerfile 4 (normally the best): The non-root Dockerfile, with ccache mounted from the host (otherwise identical to option 2)

NOTE:

Mounting the ccache executable from the host avoids version conflicts between the host and the Docker container.

The Docker image build is also faster than option 3.

Anyhow, keep in mind that this option requires ccache to be installed on the host.

Also, don't forget to use a ccache executable with hiredis statically linked, as described in the ccache install document.

Build the four Docker images

The Docker images can be built with this script:

cd docker-test && ./create-dockerfiles.sh && ./build-docker-images.sh

Alternatively, run cd docker-test && make clean all

Wrapper for non-root tasks (only needed if the Docker container is run as root):

%%% TODO: TEST THIS WRAPPER!

build-as-non-root.sh

--------------------------------------------

if [ $(id -u) -eq 0 ];then

# User is root, change to 'ccache' user

sudo -u ccache bash -c './build.sh'

else

# User is non-root, execute as normal

./build.sh

fi

--------------------------------------------

Health check (only needed if the Docker container is run as root - use with care)

stat -c "%U" "$CCACHE_DIR" # Should return 'ccache', not 'root'

stat -c "%G" "$CCACHE_DIR" # Should return 'ccache', not 'root'

if [ "$(stat -c "%U" "$CCACHE_DIR")" != "ccache" ] || [ "$(stat -c "%G" "$CCACHE_DIR")" != "ccache" ]; then

chgrp ccache "$CCACHE_DIR"

# -exec ... {} + is safe for directory names that contain spaces

find "$CCACHE_DIR" -type d -exec chmod g+s+w {} +

fi

Run the containers:

The Docker containers can be run with this script:

cd docker-test && ./create-dockerfiles.sh && ./build-docker-images.sh && ./run-docker-containers.sh

Alternatively, run cd docker-test && make clean all run

%%% TODO: TEST SCRIPTS INSIDE DOCKER!

Conclusion:

- Adding a non-root user to a Docker container makes it perfectly possible to share a ccache cache between users on the host and a Docker user.

- Using Docker or not is completely transparent when using a shared ccache cache with other users on the same host. No config changes to ccache are required, as other users only "see" the "real" User ID, regardless of whether the current user compiles inside or outside a Docker container.

The script (Docker)

The sharedtest-docker.sh script will perform the following steps for each user:

- Run the compile script on the host, once for each user, in the user's HOME directory (the same as sharedtest-hits-with-nohashdir.sh).

- Besides running the compile script:

  - a. Run the "non-root" Docker container, and run the compile script inside that container.

  - b. Run the "root" Docker container, and run the compile script inside that container, as the non-root user.

The 3 steps are expected to add the same number of hits to the cache.

TODO: Test installing an older compiler version in a running Docker container, with a "warm" shared cache.

Configure to use the older version by default, as described here:

sudo apt-get install gcc-4

sudo update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-4 4

sudo update-alternatives --config gcc

When compiling with the older version, ccache should NOT detect hits, as a different compiler version has been used, even if the compiler command and arguments are exactly the same.

Results (Docker)

TODO: %%% TEST RESULTS FROM sharedtest-docker.sh

Cache debugging

Besides ccache -s (which is probably your best friend for debugging), consider uncommenting these lines in /var/ccache.env:

###CCACHE_DEBUG=1

###CCACHE_DEBUGDIR=/var/ccache_debug

###CCACHE_LOGFILE=/var/ccache_log/ccache.log

The CCACHE_DEBUGDIR directory tree may indicate why two users created a cache object each (2 misses) for the same compilation command, instead of a shared object (1 miss, 1 hit).
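One way to use the debug directory is to diff the hash-input dumps that two users produced for the same source file. This is a sketch, assuming that your ccache version writes files ending in ccache-input-text in debug mode; the function name compare_debug_inputs is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: compare two debug dumps, one per user.
# $1, $2 = paths to two *ccache-input-text files (assumed file suffix).
compare_debug_inputs() {
    if diff -u "$1" "$2"; then
        echo "Inputs are identical"
    else
        echo "Inputs differ: see the diff above"
    fi
}

# Locating candidate files (left commented out, needs a populated debug dir):
# find /var/ccache_debug -name '*ccache-input-text'
```

A differing line that contains a user-specific path such as /home/alice would point to the CCACHE_NOHASHDIR or CCACHE_BASEDIR settings discussed earlier.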

Where to go from here

ccache + cHash

Without doubt, ccache does a very good job of speeding up compilation.

Anyhow, there are situations where the compilation time could be optimized even further.

Here is one example:

Change a comment which exists in all source code files in a project, let's say the copyright year.

As only a comment was changed, the compiled code would be identical to before, but the hash for each file has changed, so ccache decides it is a "Miss", and recompiles all files in the entire project anyway.

Here is where the compiler plugin cHash kicks in (text copied from the cHash GitHub project page):

- GNU make itself detects situations, where no source file has a newer timestamp than the resulting object file and avoid the compiler invocation at all.

- CCache calculates a textual hash of the preprocessed source code and looks up the hash in an object-file cache. Here, at least the C preprocessing has to be done (expand all the #include statements).

- cHash: We let the compiler proceed even further and wait for the C parsing to complete. With a compiler plugin, we interrupt the compiler after the parsing, calculate a hash over the abstract syntax tree (AST) and decide upon that hash, if we can abort the compiler and avoid the costly optimizations.
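The difference between a textual hash and a hash over a "more semantic" form can be shown with a toy example. This is only an illustration of the idea. It is not how ccache or cHash compute their hashes (cHash hashes the AST inside a compiler plugin). The function names and the sed-based comment stripping are assumptions made for this sketch.

```shell
#!/bin/sh
# Toy checksum over the file text exactly as it is.
raw_hash() { cksum < "$1"; }
# Toy checksum after dropping everything from "//" to the end of each line.
normalised_hash() { sed 's://.*$::' "$1" | cksum; }

work="$(mktemp -d)"
printf '// Copyright 2023\nint main(void) { return 0; }\n' > "$work/old.c"
printf '// Copyright 2024\nint main(void) { return 0; }\n' > "$work/new.c"

if [ "$(raw_hash "$work/old.c")" = "$(raw_hash "$work/new.c")" ]; then
    echo "raw hashes: same"
else
    echo "raw hashes: different"
fi
if [ "$(normalised_hash "$work/old.c")" = "$(normalised_hash "$work/new.c")" ]; then
    echo "normalised hashes: same"
else
    echo "normalised hashes: different"
fi
rm -rf "$work"
```

Only the copyright year differs between the two files, so the raw checksums differ while the comment-free checksums match.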

Links:

- https://github.com/luhsra/chash

- https://lab.sra.uni-hannover.de/Research/cHash/

- https://www.betriebssysteme.org/wp-content/uploads/2017/11/c.dietrich-vortrag.pdf

ccache alternatives

Other cache tools, out of this scope, but worth mentioning: